By now you’ve probably heard of ESG (Environmental, Social, Governance) ratings for companies, or ratings for their carbon footprint. Well, now a UK company has come up with a way of rating the ‘ethics’ of social media companies.

EthicsGrade is an ESG ratings agency focusing on AI governance. Headed up by Charles Radclyffe, the former head of AI at Fidelity, it uses AI-driven models to create a more complete picture of the ESG of organizations, harnessing Natural Language Processing to automate the analysis of huge data sets. This includes tracking controversial topics and public statements.

Frustrated with the green-washing of some ‘environmental’ stocks, Radclyffe realized that the AI governance of social media companies was not being properly considered, despite presenting an enormous risk to investors in the wake of such scandals as the manipulation of Facebook by companies such as Cambridge Analytica during the US Election and the UK’s Brexit referendum.

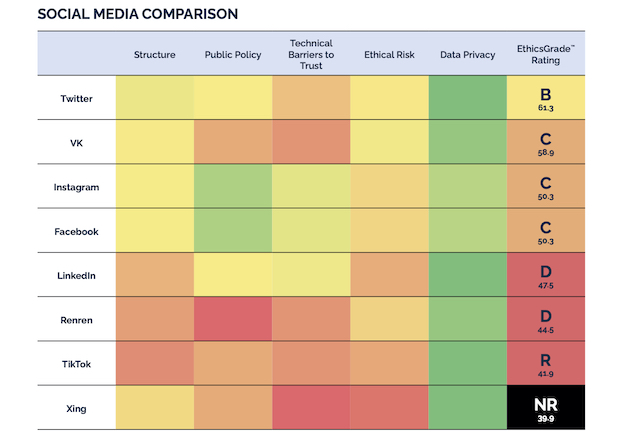

EthicsGrade Industry Summary Scorecard – Social Media

The idea is that these ratings are used by companies to better see where they should improve. But the twist is that asset managers can also see where the risks of AI might lie.

Speaking to TechCrunch, he said: “While at Fidelity I got a reputation within the firm for being the go-to person, for my colleagues in the investment team, who wanted to understand the risks within the technology firms that we were investing in. After being asked a number of times about some dodgy facial recognition company or a social media platform, I realized there was actually a massive absence of data around this stuff as opposed to anecdotal evidence.”

He says that when he left Fidelity he decided EthicsGrade would set out to cover not just ESG but also AI ethics for platforms that are driven by algorithms.

He told me: “We’ve built a model to analyze technology governance. We’ve covered 20 industries. So most of what we’ve published so far has been non-tech companies because these are risks that are inherent in many other industries, other than simply social media or big tech. But over the next couple of weeks, we’re going live with our data on things which are directly related to tech, starting with social media.”

Essentially, what they are doing closely parallels what is already being done in the ESG space.

“The question we want to be able to answer is how does TikTok compare against Twitter or WeChat as against WhatsApp. And what we’ve essentially found is that things like GDPR have done a lot of good in terms of raising the bar on questions like data privacy and data governance. But a lot of the other areas that we cover, such as ethical risk or a firm’s approach to public policy, are indeed technical questions about risk management,” says Radclyffe.

But, of course, they are effectively rating algorithms. Are the ratings they are giving the social platforms themselves derived from algorithms? EthicsGrade says it is training its own AI through NLP as it goes, so that it can automate what is currently a very human-analyst-centric process, just as Sustainalytics et al. did years ago in the environmental arena.

So how are they coming up with these ratings? EthicsGrade says it is evaluating “the extent to which organizations implement transparent and democratic values, ensure informed consent and risk management protocols, and establish a positive environment for error and improvement.” And this is all achieved, they say, through publicly available data: policy, website, lobbying, etc. In simple terms, they rate the governance of the AI: not necessarily the algorithms themselves, but what checks and balances are in place to ensure that the outcomes and inputs are ethical and managed.

“Our goal really is to target asset owners and asset managers,” says Radclyffe. “So if you look at any of these firms like, let’s say Twitter, 29% of Twitter is owned by five organizations: it’s Vanguard, Morgan Stanley, BlackRock, State Street and ClearBridge. If you look at the ownership structure of Facebook or Microsoft, it’s the same firms: Fidelity, Vanguard and BlackRock. And so really we only need to win a couple of hearts and minds, we just need to convince the asset owners and the asset managers that questions like the ones journalists have been asking for years are pertinent and relevant to their portfolios and that’s really how we’re planning to make our impact.”

Asked if they look at the content of things like tweets, he said no: “We don’t look at content. What we concern ourselves with is how they govern their technology, and where we can find evidence of that. So what we do is we write to each firm with our rating, with our assessment of them. We make it very clear that it’s based on publicly available data. And then we invite them to complete a survey. Essentially, that survey helps us validate data of these firms. Microsoft is the only one that’s completed the survey.”

Ideally, firms will “verify the information, that they’ve got a particular process in place to make sure that things are well-managed and their algorithms don’t become discriminatory.”

In an age increasingly driven by algorithms, it will be interesting to see if this idea of rating them for risk takes off, especially amongst asset managers.