Last CES was a time of reckoning for lidar companies, many of which were cratering due to a lack of demand from a (still) non-existent autonomous vehicle industry. The few that excelled did so by specializing, and this year the trend has pushed beyond lidar, with new sensing and imaging methods pushing to both compete with and complement the laser-based tech.

Lidar pushed ahead of traditional cameras because it could do things they couldn’t — and now some companies are pushing to do the same with tech that’s a little less exotic.

A good example of addressing the problem of perception by different means is Eye Net’s vehicle-to-x tracking platform. This is one of those techs that’s been talked about in the context of 5G (admittedly still somewhat exotic), which for all the hype really does enable short-distance, low-latency applications that could be life-savers.

Eye Net provides collision warnings between vehicles equipped with its tech, whether they have cameras or other sensing tech equipped or not. The example they provide is a car driving through a parking lot, unaware that a person on one of those horribly unsafe electric scooters is moving perpendicular to it ahead, about to zoom into its path but totally obscured by parked cars. Eye Net’s sensors detect the position of the devices on both vehicles and send warnings in time for either or both to brake.
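To make the parking lot scenario concrete, here is a minimal sketch of how a warning could be derived from two shared positions and velocities. It is not Eye Net’s method, which the company has not described at this level. It is a standard closest-point-of-approach calculation, and the function names, the 2-meter warning radius and the 3-second horizon are all assumptions chosen for illustration.

```python
import math

def closest_approach(p1, v1, p2, v2):
    """Return (time_s, distance_m) at which two constant-velocity
    road users are nearest. Positions in meters, velocities in m/s."""
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]   # relative position
    vx, vy = v2[0] - v1[0], v2[1] - v1[1]   # relative velocity
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0:
        # Same velocity: the gap never changes.
        return 0.0, math.hypot(rx, ry)
    # Time that minimizes the gap, clamped so we never look into the past.
    t = max(0.0, -(rx * vx + ry * vy) / speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)

def should_warn(p1, v1, p2, v2, warn_radius_m=2.0, horizon_s=3.0):
    """Hypothetical rule: warn if the two will pass within
    warn_radius_m of each other inside the next horizon_s seconds."""
    t, d = closest_approach(p1, v1, p2, v2)
    return d <= warn_radius_m and t <= horizon_s

# Car heading east at 5 m/s; scooter 10 m ahead and 8 m to the side,
# crossing at 4 m/s on a perpendicular path.
car_pos, car_vel = (0.0, 0.0), (5.0, 0.0)
scooter_pos, scooter_vel = (10.0, -8.0), (0.0, 4.0)
print(should_warn(car_pos, car_vel, scooter_pos, scooter_vel))
```

Nothing in this calculation depends on line of sight, which is the point of the approach: the parked cars in between do not matter as long as both devices can report where they are.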

They’re not the only ones attempting something like this, but they hope that by providing a sort of white-label solution, a good size network can be built relatively easily, instead of having none, and then all VWs equipped, and then some Fords and some e-bikes, and so on.

But vision is still going to be a major part of how vehicles navigate, and advances are being made on multiple fronts.

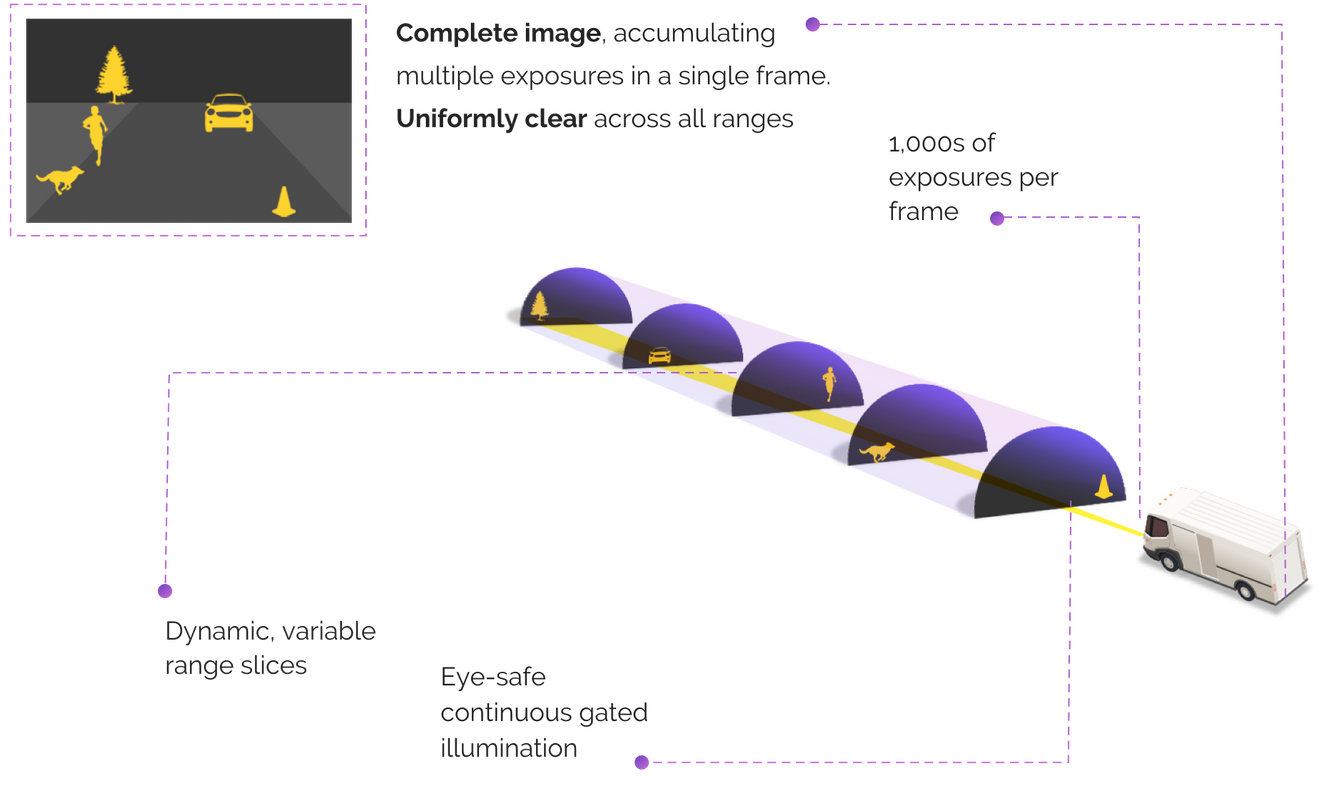

Brightway Vision, for instance, addresses the issue of normal RGB cameras having limited visibility in many real-world conditions by going multispectral. In addition to ordinary visible-light imagery, the company’s camera is mated to a near-infrared beamer that scans the road ahead at set distance intervals many times a second.

The idea is that if the main camera can’t see 100 feet out because of fog, the NIR imagery will still catch any obstacles or road features when it scans that “slice” in its regular sweep of the incoming area. It combines the benefits of traditional cameras with those of IR ones, but manages to avoid the shortcomings of both. The pitch is that there’s no reason to use a normal camera when you can use one of these, which does the same job better and may even allow another sensor to be cut out.

Foresight Automotive also uses multispectral imagery in its cameras (chances are hardly any vehicle camera will be limited to visible spectrum in a few years), dipping into thermal via a partnership with FLIR, but what it’s really selling is something else.

To provide 360-degree (or close) coverage, generally multiple cameras are required. But where those cameras go differs on a compact sedan versus an SUV from the same manufacturer — let alone on an autonomous freight vehicle. Because those cameras have to work together, they need to be perfectly calibrated, aware of the exact position of the others, so they know, for example, that they’re both looking at the same tree or bicyclist and not two identical ones.

Foresight’s advance is to simplify the calibration stage, so a manufacturer, designer or test platform doesn’t have to laboriously re-test and re-certify the system every time the cameras need to be moved half an inch in one direction or the other. The Foresight demo shows them sticking the cameras on the roof of the car seconds before driving it.

It has parallels to another startup called Nodar that also relies on stereoscopic cameras, but takes a different approach. The technique of deriving depth from binocular triangulation, as the company points out, goes back decades, or millions of years if you count our own vision system, which works in a similar way. The limitation that has held this approach back isn’t that optical cameras fundamentally can’t provide the depth information needed by an autonomous vehicle, but that they can’t be trusted to remain calibrated.

Nodar shows that its paired stereo cameras don’t even need to be mounted to the main mass of the car, which would reduce jitter and fractional mismatches between the cameras’ views. Attached to the rear view mirrors, their “Hammerhead” camera setup has a wide stance (like the shark’s), which provides improved accuracy because of the larger disparity between the cameras. Since distance is determined by the differences between the two images, there’s no need for object recognition or complex machine learning to say “this is a shape, probably a car, probably about this big, which means it’s probably about this far away” as you might with a single camera solution.
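The reason a wider stance helps can be shown with the textbook stereo relation, in which depth equals focal length times baseline divided by disparity. The sketch below is not Nodar’s software. It uses the standard pinhole model for a rectified camera pair, and the focal length, baselines and quarter-pixel matching error are made-up numbers for illustration.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def depth_error(focal_px, baseline_m, depth_m, disparity_error_px=0.25):
    """First-order depth uncertainty: dZ ~= Z^2 * dd / (f * B).
    The 0.25 px matching error is an assumed value."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)

focal_px = 1000.0   # assumed focal length, in pixels
target_m = 50.0     # object 50 m ahead

# Compare a narrow windshield-style mount with a mirror-to-mirror one.
for baseline_m in (0.2, 1.5):
    err = depth_error(focal_px, baseline_m, target_m)
    print(f"baseline {baseline_m} m: about +/-{err:.2f} m at {target_m:.0f} m")
```

Under these assumptions the uncertainty at a given range shrinks in direct proportion to the baseline, so cameras spaced 1.5 meters apart resolve distance 7.5 times more finely than the same cameras spaced 20 centimeters apart. It also grows with the square of the distance, which is why long-range stereo is so sensitive to the cameras staying calibrated.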

“The industry has already shown that camera arrays do well in harsh weather conditions, just as human eyes do,” said Nodar COO and co-founder Brad Rosen. “For example, engineers at Daimler have published results showing that current stereoscopic approaches provide significantly more stable depth estimates than monocular methods and LiDAR completion in adverse weather. The beauty of our approach is that the hardware we use is available today, in automotive-grade, and with many choices for manufacturers and distributors.”

Indeed, a major strike against lidar has been the cost of the unit — even “inexpensive” ones tend to be orders of magnitude more expensive than ordinary cameras, something that adds up very quickly. But team lidar hasn’t been standing still either.

Sense Photonics came onto the scene with a new approach that seemed to combine the best of both worlds: a relatively cheap and simple flash lidar (as opposed to spinning or scanning, which tend to add complexity) mated to a traditional camera so that the two see versions of the same image, allowing them to work together in identifying objects and establishing distances.

Since its debut in 2019 Sense has refined its tech for production and beyond. The latest advance is custom hardware that has enabled it to image objects out to 200 meters — generally considered on the far end both for lidar and traditional cameras.

“In the past, we have sourced an off-the-shelf detector to pair with our laser source (Sense Illuminator). However, our 2 years of in-house detector development has now completed and is a huge success, which allows us to build short-range and long-range automotive products,” said CEO Shauna McIntyre.

“Sense has created ‘building blocks’ for a camera-like LiDAR design that can be paired with different sets of optics to achieve different FOV, range, resolution, etc,” she continued. “And we’ve done so in a very simple design that can actually be manufactured in large volumes. You can think of our architecture like a DSLR camera where you have the ‘base camera’ and can pair it with a macro lens, zoom lens, fisheye lens, etc. to achieve different functions.”

One thing all the companies seemed to agree on is that no single sensing modality will dominate the industry from top to bottom. Leaving aside that the needs of a fully autonomous (i.e. level 4-5) vehicle are very different from those of a driver assist system, the field moves too quickly for any one approach to remain on top for long.

“AV companies cannot succeed if the public is not convinced that their platform is safe and the safety margins only increase with redundant sensor modalities operating at different wavelengths,” said McIntyre.

Whether that means visible light, near-infrared, thermal imaging, radar, lidar, or as we’ve seen here, some combination of two or three of these, it’s clear the market will continue to favor differentiation — though as with the boom-bust cycle seen in the lidar industry a few years back, it’s also a warning that consolidation won’t be far behind.

Read the original post: Startups look beyond lidar for autonomous vehicle perception
